While preparing for Azure certification, and based on my project experience with Azure Kubernetes Service (AKS), I want to share several best practices for AKS deployment. In this post, I will walk you through selecting the appropriate options within AKS.

Networking models

AKS supports two networking models:

Kubenet networking – Azure manages the virtual network resources as the cluster is deployed and uses the kubenet Kubernetes plugin. Pods receive IP addresses from an address space that is logically separate from the Azure virtual network subnet of the nodes. Network address translation (NAT) is then configured so that pods can reach resources in the Azure virtual network. This model is more complicated and introduces management overhead.

Azure CNI networking – Deploys into an existing virtual network, and uses the Azure Container Networking Interface (CNI) Kubernetes plugin. Microsoft recommends choosing CNI networking for production deployment.

Azure CNI networking assigns each pod an individual IP address and can route to other network services, including on-premises resources. When you create an AKS cluster via the Azure portal, kubenet networking (Basic) is the default; select Advanced networking to use the CNI model.

When you use Azure CNI networking, the virtual network resource is created in a separate resource group from the AKS cluster. The resource group is given a default name; however, you can set the name when deploying via ARM templates.

When selecting the CNI networking model, you must be careful with IP address management. If you exhaust all IP addresses in the target subnet, you will need to redeploy the cluster to a new, larger subnet, as you cannot resize a subnet in Azure once resources are deployed to it. With CNI, each pod is assigned an IP address from the subnet, so you need some foresight into the number of nodes and pods that will be deployed to the cluster.

For example, if you select 3 nodes during AKS cluster creation using the CNI network model, Azure expects to be able to allocate 90 IP addresses from the subnet for the maximum number of deployable pods. The default number of pods per node is 30, so 3 nodes * 30 pods per node equals 90 IP addresses. The calculation is (N * max pods per node), where N is the number of nodes.

In addition, AKS requires 3 IP addresses per node and kube-api requires 1 IP address, all allocated from the same subnet. The preferred subnet space for AKS is /24.
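
The subnet arithmetic above can be sketched in a few lines of Python. This is only a rough estimate built from the figures quoted in this post (30 pods per node, 3 addresses per node, 1 for the API server). The function names, parameters, and the example CIDR are illustrative, and the 5 addresses that Azure reserves in every subnet are included as an assumption about the target subnet.

```python
import ipaddress

# Assumption: Azure reserves 5 addresses in every subnet.
AZURE_RESERVED_PER_SUBNET = 5


def required_ips(nodes, max_pods_per_node=30, ips_per_node=3, api_server_ips=1):
    """Estimate the IPs a cluster needs, using the figures quoted in this post."""
    pod_ips = nodes * max_pods_per_node   # N * max pods per node
    node_ips = nodes * ips_per_node       # per-node addresses
    return pod_ips + node_ips + api_server_ips


def usable_ips(cidr):
    """Addresses left in a subnet after the Azure reservation."""
    subnet = ipaddress.ip_network(cidr)
    return subnet.num_addresses - AZURE_RESERVED_PER_SUBNET


def fits(nodes, cidr, **kwargs):
    """True if the estimated requirement fits in the given subnet."""
    return required_ips(nodes, **kwargs) <= usable_ips(cidr)


if __name__ == "__main__":
    example_subnet = "10.240.0.0/24"  # hypothetical subnet for illustration
    print(required_ips(3))            # 90 pod IPs + 9 node IPs + 1 API server IP
    print(usable_ips(example_subnet))
    print(fits(3, example_subnet))
    print(fits(8, example_subnet))
```

With these assumptions, a 3-node cluster fits comfortably in a /24, while 8 nodes at 30 pods each would not. It is worth leaving headroom for scaling out, since the subnet cannot be resized later.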

To reduce the number of pods per node in non-production clusters, or to increase it for production, you need to use ARM templates or the Azure CLI. However, this can only be set at creation time; on an existing cluster you must create a new node pool and migrate the pods across.

The maximum number of pods per node in the Azure CNI model is 250. Below is an ARM Template snippet to set the maximum pods per node.

    "agentPoolProfiles": [
      {
        "name": "agentpool",
        "maxPods": 30
      }
    ]
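
If you generate template fragments from a script, a small guard can catch a bad `maxPods` value before deployment. The sketch below is a minimal illustration, not part of any Azure SDK: the helper name `agent_pool_profile` is hypothetical, the upper bound of 250 is the figure quoted above, and the lower bound of 1 is only a placeholder (AKS enforces its own minimum).

```python
import json

# Upper bound for Azure CNI as quoted in this post.
MAX_PODS_LIMIT_AZURE_CNI = 250


def agent_pool_profile(name, max_pods):
    """Build one agentPoolProfiles entry, rejecting invalid maxPods values."""
    # The template expects an integer, so reject strings such as "30".
    if isinstance(max_pods, bool) or not isinstance(max_pods, int):
        raise TypeError("maxPods must be an integer")
    # Lower bound of 1 is a placeholder assumption, not an AKS rule.
    if not 1 <= max_pods <= MAX_PODS_LIMIT_AZURE_CNI:
        raise ValueError(
            f"maxPods must be between 1 and {MAX_PODS_LIMIT_AZURE_CNI}"
        )
    return {"name": name, "maxPods": max_pods}


fragment = {"agentPoolProfiles": [agent_pool_profile("agentpool", 30)]}
print(json.dumps(fragment, indent=2))
```

The printed JSON can be pasted into the `properties` section of the cluster resource in a template.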

Secure AKS application traffic via Application Gateway with Web Application Firewall (WAF)

Application Gateway can be placed in front of an ingress controller or an Azure load balancer, which are typically Kubernetes resources in the AKS cluster. By introducing Application Gateway, we avoid consuming some of the nodes' resources, such as CPU, memory, and network bandwidth.

Importantly, WAF can be introduced to scan incoming traffic to prevent attacks.

In addition, it is possible to implement SSL offload and end-to-end TLS encryption with Application Gateway. This is achieved by configuring Application Gateway in end-to-end TLS communication mode. Application Gateway terminates the TLS session at the gateway and decrypts user traffic, then initiates a new TLS connection to the backend server, re-encrypting the data using the backend server's public key certificate before transmitting it. Responses go through the reverse process back to the caller.

The picture below, from Microsoft, illustrates this flow:

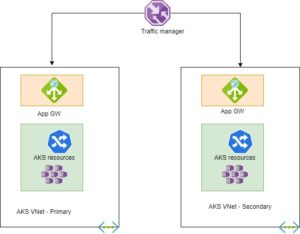

Use Traffic Manager to support multi-region deployment for DR

For disaster recovery (DR) protection from an Azure regional outage, it is recommended to plan carefully, deploy critical production systems into multiple regions, and implement a global service such as Traffic Manager to support rapid failover in the event of a disaster.

Plan to deploy production AKS clusters in a second Azure region. There is no built-in direct synchronisation between AKS clusters deployed in separate regions, so as part of application deployment we must ensure both AKS clusters receive the latest code.

With Traffic Manager, we can configure the routing options to support Active/Active or Active/Passive clusters. A single public endpoint, exposed as a DNS name, is accessible to our users over the internet.
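
To make the two patterns concrete, here is a small Python simulation of how endpoint selection could work. It is a conceptual sketch only and does not call the Traffic Manager API: the `Endpoint` class, the function names, and the endpoint names are all hypothetical. Active/Passive is modelled as "lowest priority value among healthy endpoints" and Active/Active as a weighted random choice among healthy endpoints.

```python
import random
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    healthy: bool
    priority: int = 1  # lower value is preferred (Active/Passive)
    weight: int = 1    # relative share of traffic (Active/Active)


def pick_priority(endpoints):
    """Active/Passive: return the healthy endpoint with the lowest priority value."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda e: e.priority)


def pick_weighted(endpoints, rng=random):
    """Active/Active: pick a healthy endpoint at random, proportional to weight."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        return None
    return rng.choices(healthy, weights=[e.weight for e in healthy], k=1)[0]


# Hypothetical clusters in two regions.
primary = Endpoint("aks-region-a", healthy=True, priority=1)
secondary = Endpoint("aks-region-b", healthy=True, priority=2)

print(pick_priority([primary, secondary]).name)  # primary while it is healthy
primary.healthy = False                          # simulate a regional outage
print(pick_priority([primary, secondary]).name)  # traffic moves to the secondary
```

Because Traffic Manager works at the DNS level, real failover time also depends on health probe settings and the DNS TTL, which this sketch does not model.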

Scanning Container Images

Azure Security Center provides vulnerability management for Linux-based images stored in Azure Container Registry (ACR). Whenever a new image is pushed to ACR, Security Center scans the image with Qualys and sends a notification when a vulnerability is detected.

Optional Uptime SLA now available for Azure Kubernetes Service (AKS)

This is another great update from Azure as of May 2020. AKS is a free container service that simplifies deployment, operations and management of Kubernetes as a fully managed orchestrator service. You pay for the resource consumption (compute, storage, etc.) of the cluster's nodes; there is no charge for cluster management.

The optional Uptime SLA is a financially backed service level agreement (SLA) that guarantees an uptime of 99.95% for the Kubernetes API server for clusters that use Azure Availability Zones, and 99.9% for clusters that do not use Availability Zones.

The high-level differences between the Free tier and the Uptime SLA:

| Service | Free | Uptime SLA |
| --- | --- | --- |
| API Server Availability | 99.5% (service level objective only) | 99.9%, or 99.95% with Availability Zones |
| SLA-backed | No | Yes |
| Price | Free | $0.10/hour |
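
To put these percentages in perspective, the short Python sketch below converts an availability figure into allowed downtime and estimates the cost of the Uptime SLA at the $0.10/hour price listed above. The 30-day month is a simplifying assumption and the function names are illustrative.

```python
# Simplifying assumption: a 30-day month.
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60
HOURS_PER_30_DAY_MONTH = 30 * 24


def allowed_downtime_minutes(sla_percent, period_minutes=MINUTES_PER_30_DAY_MONTH):
    """Downtime permitted in the period while still meeting the availability target."""
    return period_minutes * (100 - sla_percent) / 100


def monthly_sla_cost(price_per_hour=0.10, hours=HOURS_PER_30_DAY_MONTH):
    """Cost of the Uptime SLA over the period at the listed hourly price."""
    return price_per_hour * hours


for sla in (99.9, 99.95):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% allows about {minutes:.1f} minutes of downtime per 30 days")

print(f"Uptime SLA cost: about ${monthly_sla_cost():.2f} per 30 days")
```

Under these assumptions, moving from 99.9% to 99.95% roughly halves the permitted API server downtime, from about 43 minutes to about 22 minutes per month.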

I hope this article gives you some useful recommendations for AKS cluster deployment.

Santhosh has over 15 years of experience in the IT industry. He works as a Cloud Infrastructure Architect and has a wide range of expertise in Microsoft technologies, with a specialization in public and private cloud services for enterprise customers. His varied background includes work in cloud computing, virtualization, storage, networks, automation and DevOps.