Introduction:

During a discussion with a colleague, he asked me about the significance of creating a private Azure Kubernetes Service (AKS) cluster. I explained that a private AKS cluster is essential for organisations that need a secure and isolated environment for their Kubernetes workloads. In a shared environment, there is a risk of unauthorised access, data breaches, and other security threats that can compromise the system’s integrity. After our conversation, I thought a more in-depth blog post on the topic would be helpful. This post covers the technical details, advantages, and best practices for creating a private AKS cluster.

What is the control plane in AKS?

Setting up and running a Kubernetes cluster yourself requires significant technical expertise because of the many intricacies involved. Azure Kubernetes Service (AKS) simplifies this as a hosted Kubernetes service: Azure takes over the operational overhead, such as health monitoring and maintenance, so you don’t have to deal with the complexities of building a cluster from scratch. Azure automatically configures the control plane, which is provided as a managed Azure resource at no additional cost and is abstracted away from the user. The Kubernetes control plane manages the worker nodes and the applications that users deploy onto them. Azure fully manages this critical component; by default, it is accessible over the internet, and both the worker nodes and the cluster operators need to reach it.

To summarise, Azure Kubernetes Service (AKS) simplifies the deployment of managed Kubernetes clusters by taking over the operational overhead, and users only need to pay for and manage the nodes attached to the AKS cluster.

If you’re new to containers and Kubernetes, check out my previous blog posts, where I discuss them in more detail:

- Part 1: Introduction to Docker, Containers, and Kubernetes

- Part 2: Deep Dive into Kubernetes

- Part 3: Containers Services in Azure

An AKS cluster and its control plane need robust security measures to prevent unauthorised access and protect the confidentiality of sensitive data. To achieve this, organisations should implement authentication and authorisation, for example by integrating the cluster with Azure Active Directory (Azure AD) and using role-based access control (RBAC). Together, these ensure that only authorised users can access the cluster and that they can perform only the actions they are permitted to. Restricting access to the API server to specific IP ranges further improves security by blocking access attempts from outside those ranges.
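As a rough illustration of the Azure AD integration described above, the sketch below assembles an `az aks update` command that enables AKS-managed Azure AD authentication and Azure RBAC for Kubernetes authorisation. It only builds the argument list and never calls Azure. The helper name `build_aad_update_cmd` and all resource names and IDs are hypothetical placeholders; confirm the flags with `az aks update --help` for your CLI version before relying on them.

```python
from __future__ import annotations

import shlex


def build_aad_update_cmd(resource_group: str, cluster: str, admin_group_ids: list[str]) -> list[str]:
    """Build, but do not run, an `az aks update` command that enables
    AKS-managed Azure AD authentication and Azure RBAC authorisation.

    Hypothetical helper: the resource group, cluster name and group
    object IDs are placeholders you supply.
    """
    if not admin_group_ids:
        raise ValueError("at least one Azure AD admin group object ID is required")
    return [
        "az", "aks", "update",
        "--resource-group", resource_group,
        "--name", cluster,
        "--enable-aad",  # AKS-managed Azure AD integration for authentication
        "--enable-azure-rbac",  # authorise Kubernetes API calls with Azure RBAC
        "--aad-admin-group-object-ids", ",".join(admin_group_ids),
    ]


if __name__ == "__main__":
    cmd = build_aad_update_cmd("rg-demo", "aks-demo", ["<admin-group-object-id>"])
    # Print a shell-quoted version that can be reviewed before running it.
    print(shlex.join(cmd))
```

Keeping the command as a list (rather than one string) makes it easy to review or pass to `subprocess.run` later without shell-quoting surprises.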

What are the different options with the AKS cluster in Azure?

AKS provides three different options for accessing the control plane:

- AKS Public cluster

- AKS cluster with API Server VNet integration

- AKS Private cluster
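To check which of these three options an existing cluster uses, you could inspect the JSON returned by `az aks show --resource-group <rg> --name <cluster> --output json`. The sketch below assumes that output contains the camelCase fields `apiServerAccessProfile`, `enableVnetIntegration` and `enablePrivateCluster`; verify those field names against your own CLI output. The function name and the sample data are hypothetical.

```python
from __future__ import annotations

import json


def classify_api_server_access(cluster: dict) -> str:
    """Return which control-plane access option a cluster uses, based on
    the parsed output of `az aks show --output json`.

    Assumes the field names apiServerAccessProfile, enableVnetIntegration
    and enablePrivateCluster (an assumption to verify).
    """
    # Public clusters may return null for the whole profile.
    profile = cluster.get("apiServerAccessProfile") or {}
    if profile.get("enableVnetIntegration"):
        # VNet-integrated clusters can run in either public or private mode.
        mode = "private" if profile.get("enablePrivateCluster") else "public"
        return f"API Server VNet integration ({mode} access)"
    if profile.get("enablePrivateCluster"):
        return "Private cluster"
    return "Public cluster"


if __name__ == "__main__":
    # Hypothetical, trimmed-down `az aks show` output.
    sample = json.loads('{"name": "aks-demo", "apiServerAccessProfile": {"enablePrivateCluster": true}}')
    print(classify_api_server_access(sample))  # Private cluster
```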

Option 1 – AKS Public cluster

With a public cluster, you access the control plane through a public endpoint, a fully qualified domain name (FQDN) containing a unique identifier in the .hcp..azmk8s.io format. This FQDN resolves to a public IP address and serves as the connection point for both cluster operators and worker nodes. To view the public endpoints, use the kubectl get endpoints command.

When you create a cluster, AKS also creates a public IP address for egress traffic from worker nodes and pods. This IP address is different from the IP address of the cluster’s public endpoint and is used exclusively for outbound traffic from the cluster.

In addition, AKS lets you define authorised IP address ranges for the control plane. Only requests coming from these ranges can reach the cluster’s API server. You can configure the ranges with the Azure portal or the Azure CLI.

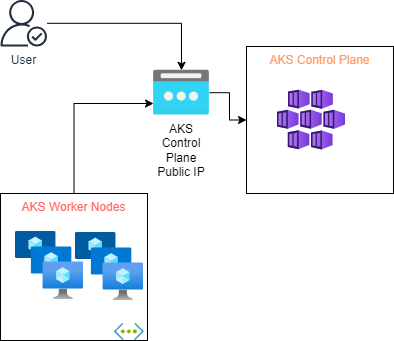

The diagram above illustrates how the AKS worker nodes and users/applications access the AKS control plane with the AKS Public cluster (default) option.
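The authorised IP ranges described for the public cluster can be set with the Azure CLI. The sketch below validates a list of ranges with Python's `ipaddress` module and assembles an `az aks update ... --api-server-authorized-ip-ranges` command without running it. The helper name and the example ranges (documentation-reserved addresses) are hypothetical.

```python
from __future__ import annotations

import ipaddress
import shlex


def build_authorized_ranges_cmd(resource_group: str, cluster: str, cidrs: list[str]) -> list[str]:
    """Build, but do not run, an `az aks update` command that limits the
    public API server endpoint to the given address ranges."""
    if not cidrs:
        raise ValueError("provide at least one CIDR range")
    # ip_network() rejects malformed input and ranges with host bits set
    # (e.g. "203.0.113.5/24"); a bare address becomes a /32.
    normalized = [str(ipaddress.ip_network(c)) for c in cidrs]
    return [
        "az", "aks", "update",
        "--resource-group", resource_group,
        "--name", cluster,
        "--api-server-authorized-ip-ranges", ",".join(normalized),
    ]


if __name__ == "__main__":
    cmd = build_authorized_ranges_cmd("rg-demo", "aks-demo", ["203.0.113.0/24", "198.51.100.7"])
    print(shlex.join(cmd))
    # az aks update --resource-group rg-demo --name aks-demo --api-server-authorized-ip-ranges 203.0.113.0/24,198.51.100.7/32
```

Validating the ranges locally catches typos such as a host address with a network mask before the request ever reaches Azure.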

Option 2 – AKS cluster with API Server VNet integration

Azure Kubernetes Service (AKS) offers API Server VNet Integration, which enables secure and efficient network communication between the API server and the cluster nodes. This integration projects the API server endpoint directly into a delegated subnet in the VNet where AKS is deployed, without requiring a private link or tunnel. As a result, network traffic between the API server and the nodes stays on the private network. At the time of writing, this feature is in preview.

Within the VNet, the API server is exposed through an internal load balancer VIP in the delegated subnet, and the cluster nodes use this VIP to reach it. This keeps communication between the API server and the nodes off the public internet.

Because this traffic flows through your own network, API Server VNet Integration keeps the communication between the API server and the node pools private.

If you bring your own virtual network (VNet), you must create an API server subnet and delegate it to Microsoft.ContainerService/managedClusters. This delegation grants the AKS service the permissions it needs to inject the API server pods and the internal load balancer into that subnet. You can’t use this subnet for any other workloads, but multiple AKS clusters in the same virtual network can share it. The minimum supported size for the API server subnet is /28.
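The subnet requirements above (a dedicated subnet, delegated to `Microsoft.ContainerService/managedClusters`, at least /28) can be captured in a small helper. This is a minimal sketch that only assembles an `az network vnet subnet create` command; the helper name, resource group, VNet and subnet names are hypothetical.

```python
from __future__ import annotations

import ipaddress

DELEGATION = "Microsoft.ContainerService/managedClusters"
MAX_PREFIX_LEN = 28  # /28 is the minimum API server subnet size


def build_apiserver_subnet_cmd(resource_group: str, vnet: str, subnet: str, prefix: str) -> list[str]:
    """Build, but do not run, an `az network vnet subnet create` command for
    a dedicated API server subnet delegated to AKS."""
    net = ipaddress.ip_network(prefix)
    # A longer prefix means a smaller subnet, so /29 or /30 is too small.
    if net.prefixlen > MAX_PREFIX_LEN:
        raise ValueError(f"{prefix} is smaller than the minimum /{MAX_PREFIX_LEN}")
    return [
        "az", "network", "vnet", "subnet", "create",
        "--resource-group", resource_group,
        "--vnet-name", vnet,
        "--name", subnet,
        "--address-prefixes", str(net),
        "--delegations", DELEGATION,
    ]
```

For example, `build_apiserver_subnet_cmd("rg-demo", "vnet-demo", "snet-apiserver", "10.0.0.0/28")` returns a valid command, while a `/29` prefix raises `ValueError` before anything is sent to Azure.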

Make sure the cluster identity has the necessary permissions on both the API server subnet and the node subnet; missing permissions on the API server subnet can cause provisioning to fail.
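One way to grant those permissions is one role assignment per subnet. The sketch below assumes the built-in Network Contributor role (check which role your organisation requires) and takes the principal or client ID of the cluster's managed identity as `assignee`. It only builds the `az role assignment create` commands; the helper name and all IDs are hypothetical.

```python
from __future__ import annotations


def build_role_assignment_cmds(
    assignee: str,
    apiserver_subnet_id: str,
    node_subnet_id: str,
    role: str = "Network Contributor",  # assumption: adjust to your requirements
) -> list[list[str]]:
    """Build, but do not run, one `az role assignment create` command per
    subnet so the cluster identity has permissions on both."""
    return [
        ["az", "role", "assignment", "create",
         "--scope", scope,  # full resource ID of the subnet
         "--role", role,
         "--assignee", assignee]
        for scope in (apiserver_subnet_id, node_subnet_id)
    ]
```

Building both commands together makes it harder to forget the API server subnet, which is the case the paragraph above warns can break provisioning.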

With this option, you can run either a public or a private cluster. If your AKS cluster is configured with API Server VNet Integration, you can switch between public network access and private cluster mode without redeploying the cluster. The API server hostname stays the same, but public DNS entries are updated or removed as needed.
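The sketch below shows the two steps described in this section: creating a cluster with API Server VNet Integration in your own VNet, and later switching it between private and public mode in place. It only assembles the commands. The flags reflect the preview at the time of writing and may require the `aks-preview` Azure CLI extension; the helper names and all resource IDs are hypothetical.

```python
from __future__ import annotations


def build_vnet_integration_create_cmd(
    resource_group: str,
    cluster: str,
    node_subnet_id: str,
    apiserver_subnet_id: str,
    identity_id: str,
    private: bool = False,
) -> list[str]:
    """Build, but do not run, an `az aks create` command for a cluster that
    uses API Server VNet Integration in a bring-your-own VNet."""
    cmd = [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster,
        "--network-plugin", "azure",
        "--enable-apiserver-vnet-integration",
        "--vnet-subnet-id", node_subnet_id,  # subnet for the node pools
        "--apiserver-subnet-id", apiserver_subnet_id,  # delegated API server subnet
        "--assign-identity", identity_id,  # user-assigned managed identity resource ID
    ]
    if private:
        cmd.append("--enable-private-cluster")
    return cmd


def build_private_mode_toggle_cmd(resource_group: str, cluster: str, private: bool) -> list[str]:
    """Build an `az aks update` command that switches an existing VNet-integrated
    cluster between private and public mode without redeploying it."""
    flag = "--enable-private-cluster" if private else "--disable-private-cluster"
    return ["az", "aks", "update", "--resource-group", resource_group, "--name", cluster, flag]
```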

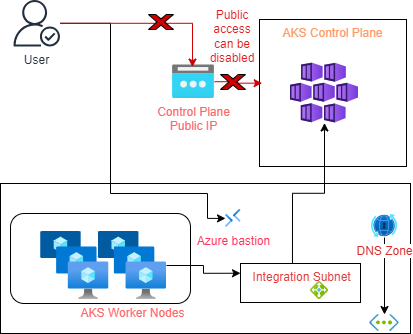

The diagram above illustrates how the AKS worker nodes and user applications access the AKS control plane with the API Server VNet integration option. The worker nodes reach the control plane through an internal load balancer hosted in the integration subnet, which is delegated to the AKS control plane. Users, on the other hand, connect to AKS through a bastion host (jumpbox) and the internal load balancer. Public access to the control plane can be enabled or disabled.

Option 3 – AKS Private cluster

When you create a private cluster, the control plane (API server) has internal IP addresses as defined in RFC 1918, Address Allocation for Private Internets. Using a private cluster ensures that network traffic between your API server and node pools stays on the private network only.
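Because a private API server uses an RFC 1918 address, a quick sanity check is whether the cluster's FQDN resolves to an address inside one of the three RFC 1918 blocks. The sketch below performs only the address check; the function name is hypothetical, and resolving the FQDN is left to the caller (for example with Python's `socket.gethostbyname` from a jumpbox).

```python
from __future__ import annotations

import ipaddress

# The three private address blocks defined in RFC 1918.
RFC1918_BLOCKS = tuple(
    ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
)


def is_rfc1918(address: str) -> bool:
    """Return True if the IPv4 address lies in an RFC 1918 block.

    IPv6 addresses always return False, because membership tests
    between different IP versions are False in the ipaddress module.
    """
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in RFC1918_BLOCKS)
```

The explicit block list is used instead of `ip.is_private`, which also covers ranges outside RFC 1918 such as loopback and link-local addresses.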

The control plane or API server lives in an AKS-managed Azure resource group, while your cluster or node pool is in your own resource group. The two communicate through the Azure Private Link service in the API server virtual network and a private endpoint exposed in your AKS cluster’s subnet.

When you provision a private AKS cluster, AKS automatically creates a private fully qualified domain name (FQDN) with a private DNS zone, and an additional public FQDN with a corresponding A record in Azure public DNS. The agent nodes still use the A record in the private DNS zone to resolve the private IP address of the private endpoint when communicating with the API server.
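The DNS behaviour described above is controlled at creation time. The sketch below assembles an `az aks create --enable-private-cluster` command, with `--private-dns-zone` set to `system` (AKS creates the zone), `none`, or the resource ID of your own zone, and optionally `--disable-public-fqdn` to skip the public FQDN. The guard against combining `none` with `--disable-public-fqdn` is an assumption based on how the `none` option relies on the public FQDN; verify it against the current AKS documentation. The helper name is hypothetical.

```python
from __future__ import annotations


def build_private_cluster_create_cmd(
    resource_group: str,
    cluster: str,
    private_dns_zone: str = "system",
    disable_public_fqdn: bool = False,
) -> list[str]:
    """Build, but do not run, an `az aks create` command for a private cluster.

    private_dns_zone: "system" (AKS creates the private DNS zone), "none",
    or the resource ID of your own private DNS zone.
    """
    # Assumption: with "none" no private DNS zone exists, so the public FQDN
    # is what resolves the API server and must not be disabled.
    if private_dns_zone == "none" and disable_public_fqdn:
        raise ValueError("--disable-public-fqdn can't be combined with --private-dns-zone none")
    cmd = [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster,
        "--enable-private-cluster",
        "--private-dns-zone", private_dns_zone,
    ]
    if disable_public_fqdn:
        cmd.append("--disable-public-fqdn")  # don't create the public FQDN
    return cmd
```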

For organisations that want to take their security further, Azure offers the private cluster feature, which eliminates the public API server endpoint. The cluster is then reachable only from a private network, which protects it from unauthorised external access attempts. This makes the feature well suited to organisations that handle sensitive data and must protect their systems from external threats.

A private AKS cluster provides a dedicated, isolated environment in which organisations can enforce security policies, controls, and compliance requirements. It can also offer benefits such as improved network performance, reduced latency, and better control over resource allocation.

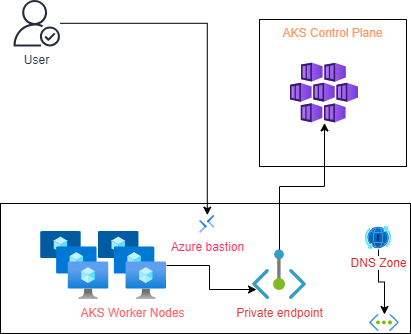

The diagram above shows how the AKS worker nodes and user applications access the AKS control plane with the AKS Private cluster option. The worker nodes reach the control plane through a private endpoint, while users access AKS through a bastion host (jumpbox) and the private endpoint.

Note: Our Zero Trust Network implementation requires a private cluster that exposes no public endpoints to the internet. The worker nodes connect to the control plane through a private endpoint, which improves security. However, there is still room for improvement in how DevOps pipelines and cluster operators access the cluster. Also note that a private cluster can only be created during the initial setup; existing clusters can’t be converted.

Private clusters have a few limitations:

- Authorised IP ranges can’t be applied to the private API server endpoint; they apply only to the public API server.

- Existing clusters can’t be converted to private clusters.

- Azure Container Registry (ACR) needs a private link to work with a private AKS cluster.
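The limitations above can be turned into a simple pre-flight checklist. The sketch below encodes them as checks on a hypothetical `ClusterPlan` description of a planned change; the class, its fields and the function name are illustrative, not part of any Azure SDK. The exception for existing clusters with API Server VNet Integration reflects the Option 2 section, where such clusters can switch to private mode in place.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ClusterPlan:
    """Hypothetical description of a planned AKS cluster or change."""
    private_cluster: bool
    authorized_ip_ranges: list[str] = field(default_factory=list)
    existing_cluster: bool = False
    apiserver_vnet_integration: bool = False
    acr_private_link: bool = True


def check_private_cluster_plan(plan: ClusterPlan) -> list[str]:
    """Return the private-cluster limitations that the plan would run into."""
    issues: list[str] = []
    if not plan.private_cluster:
        return issues  # the limitations below only apply to private clusters
    if plan.authorized_ip_ranges:
        issues.append("Authorised IP ranges can't be applied to the private API server endpoint.")
    # VNet-integrated clusters can switch modes in place; others can't be converted.
    if plan.existing_cluster and not plan.apiserver_vnet_integration:
        issues.append("Existing clusters can't be converted to private clusters.")
    if not plan.acr_private_link:
        issues.append("Azure Container Registry needs a private link to work with the private cluster.")
    return issues


if __name__ == "__main__":
    plan = ClusterPlan(private_cluster=True, authorized_ip_ranges=["203.0.113.0/24"], existing_cluster=True)
    for issue in check_private_cluster_plan(plan):
        print("-", issue)
```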

Conclusion:

When selecting options for AKS (Azure Kubernetes Service), it is crucial to understand the customer’s specific requirements and use cases. Factors such as the size of the cluster, the level of security required, the workload type, and the level of control needed all play a significant role in determining the most suitable options.

For example, a customer that requires a high level of security may opt for a private cluster instead of a public one. Carefully evaluating these factors is therefore essential to selecting the most appropriate AKS option, so that the customer’s needs are met without compromising performance, security, or scalability.

Santhosh has over 15 years of experience in IT. He works as a Cloud Infrastructure Architect and has a wide range of expertise in Microsoft technologies, specialising in public and private cloud services for enterprise customers. His varied background includes cloud computing, virtualisation, storage, networking, automation, and DevOps.