Containers and Kubernetes are among the hottest topics in application modernization. In this series, I explain the basics of containers and then dive deep into Kubernetes components. This blog post is the first of three parts:

- Part 1: Introduction to Docker, Containers, and Kubernetes

- Part 2: Deep Dive into Kubernetes

- Part 3: Container Services in Azure

Let me begin with Docker. What is Docker?

Docker is a popular runtime environment for creating and building software inside containers. Docker is built on open standards and functions inside the most common operating environments, including Linux, Microsoft Windows, and other on-premises or cloud-based infrastructures. It uses Docker images (copy-on-write snapshots) to deploy containerized applications or software in multiple settings, from development to test and production.
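To make the copy-on-write idea more concrete, here is a minimal toy model in Python. It is only a sketch of the concept, not how Docker's storage drivers are implemented: the layers are plain dictionaries, and the file paths, contents, and the `start_container` helper are all made up for illustration. Each container gets its own writable layer on top of shared, read-only image layers.

```python
from collections import ChainMap

# Read-only image layers, lowest first. Paths and contents are hypothetical.
base_layer = {"/etc/os-release": "ubuntu", "/bin/sh": "shell-v1"}
app_layer = {"/app/main.py": "print('hello')"}


def start_container(*image_layers):
    """Return a filesystem view for a new container (hypothetical helper).

    A fresh, empty writable layer is stacked on top of the shared image
    layers. Reads fall through the layers from top to bottom; writes only
    ever touch the writable layer.
    """
    writable = {}
    return ChainMap(writable, *reversed(image_layers))


container_a = start_container(base_layer, app_layer)
container_b = start_container(base_layer, app_layer)

# A write in container A lands in A's own writable layer only.
container_a["/bin/sh"] = "shell-patched"

print(container_a["/bin/sh"])  # container A sees its own modified copy
print(container_b["/bin/sh"])  # container B still sees the file from the image
print(base_layer["/bin/sh"])   # the shared image layer itself is unchanged
```

Because the image layers are never modified, many containers can share them, and only the changes made by each container take up extra space.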

Docker is an open platform for developing, shipping, and running applications. Docker lets you separate your applications from your infrastructure to deliver software quickly. With Docker, you can manage your infrastructure as you organize your applications. By taking advantage of Docker’s methodologies for shipping, testing, and deploying code quickly, you can significantly reduce the delay between writing code and running it in production.

Docker as a technology is both a packaging format and a container runtime. Packaging is the process of bundling an application together with its dependencies, such as binaries and runtime libraries. The runtime refers to the actual process of running the container images.

Docker is a container engine developed by Docker, Inc. and written in the Go programming language. Docker comes in two flavors:

- Community Edition

- Enterprise Edition

The advantages of the Docker platform are:

- Fast, convenient delivery of applications.

- Responsive scaling and deployment.

- Running more workloads on the same hardware.

Docker containers share the underlying kernel. What does that mean? Let’s say we have a system running Ubuntu with Docker installed. Docker can run containers based on any distribution, as long as that distribution is built on the same kernel as the host: in this case, Linux. If the underlying OS is Ubuntu, Docker can run a container based on another distribution such as Debian, Fedora, SUSE, or CentOS. Each container uses the kernel of the Docker host, which works with all of the distributions above.
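One way to observe the shared kernel is to print the distribution name and the kernel release from inside different environments. The small Python sketch below does that. It assumes a Linux system that provides the standard `/etc/os-release` file; the helper names `parse_os_release` and `describe_environment` are my own and are not part of Docker.

```python
import platform


def parse_os_release(text):
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and malformed lines
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"').strip("'")
    return info


def describe_environment(os_release_path="/etc/os-release"):
    """Return (distribution name, kernel release) for the current environment."""
    try:
        with open(os_release_path, encoding="utf-8") as f:
            distro = parse_os_release(f.read()).get("PRETTY_NAME", "unknown")
    except OSError:
        distro = "unknown"  # file missing, e.g. not running on Linux
    return distro, platform.release()


if __name__ == "__main__":
    distro, kernel = describe_environment()
    print(f"distribution: {distro}")
    print(f"kernel:       {kernel}")
```

If you run this script on an Ubuntu host and then inside, say, a Debian-based and a Fedora-based container on that same host, you would expect the distribution line to differ while the kernel line stays the same.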

What is a Container?

Containers are an abstraction at the app layer that packages code and dependencies together. Containers are implemented using Linux namespaces and cgroups. Namespaces let you virtualize each container’s system resources, like the file system or networking. Cgroups provide a way to limit the amount of resources like CPU and memory that each container can use.
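The limits that cgroups enforce are visible as plain files. The following Python sketch reads the memory and CPU limits of the current cgroup. It assumes a Linux host using cgroup v2 with the cgroup filesystem mounted at `/sys/fs/cgroup`, which is a common setup but not guaranteed; the function names are mine. In cgroup v2, `memory.max` holds either a byte count or the word `max`, and `cpu.max` holds a quota and a period in microseconds.

```python
from pathlib import Path

DEFAULT_CPU_PERIOD_US = 100_000  # cgroup v2 default period, in microseconds


def parse_memory_max(text):
    """Return the memory limit in bytes, or None when it is 'max' (no limit)."""
    value = text.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(text):
    """Return the CPU limit in cores from '<quota> <period>', or None if no limit."""
    parts = text.split()
    quota = parts[0]
    period = int(parts[1]) if len(parts) > 1 else DEFAULT_CPU_PERIOD_US
    return None if quota == "max" else int(quota) / period


def read_limits(cgroup_dir="/sys/fs/cgroup"):
    """Read both limits from a cgroup v2 directory.

    A limit is reported as None when it is not set or its file is not available.
    """
    parsers = (("memory.max", parse_memory_max), ("cpu.max", parse_cpu_max))
    limits = {}
    for name, parser in parsers:
        try:
            limits[name] = parser((Path(cgroup_dir) / name).read_text())
        except OSError:
            limits[name] = None
    return limits


if __name__ == "__main__":
    print(read_limits())
```

For example, inside a container started with `docker run --memory=512m --cpus=0.5` on a cgroup v2 host, you would expect this to report a memory limit of 536870912 bytes and a CPU limit of 0.5 cores.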

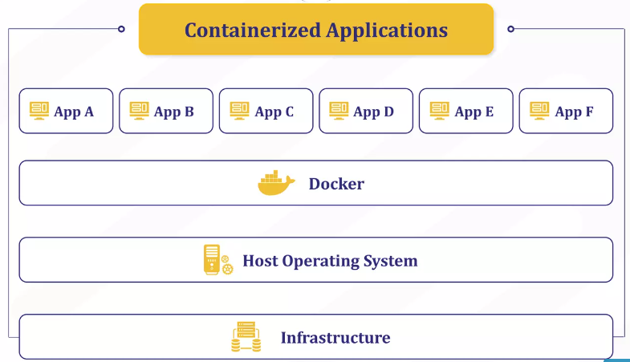

Docker provides the ability to package and run an application in a loosely isolated environment called a container. Containers are lightweight and contain everything needed to run the application, so you do not need to rely on what is currently installed on the host. The isolation and security allow you to run many containers simultaneously on a given host.

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries, and settings.

Comparing VMs and Containers:

Virtual machines are usually created and managed by a program known as a hypervisor, such as VMware ESXi or Microsoft Hyper-V. The hypervisor sits between the virtual machines and the underlying host (the host operating system or, for bare-metal hypervisors, the hardware itself) and acts as the communication medium between them.

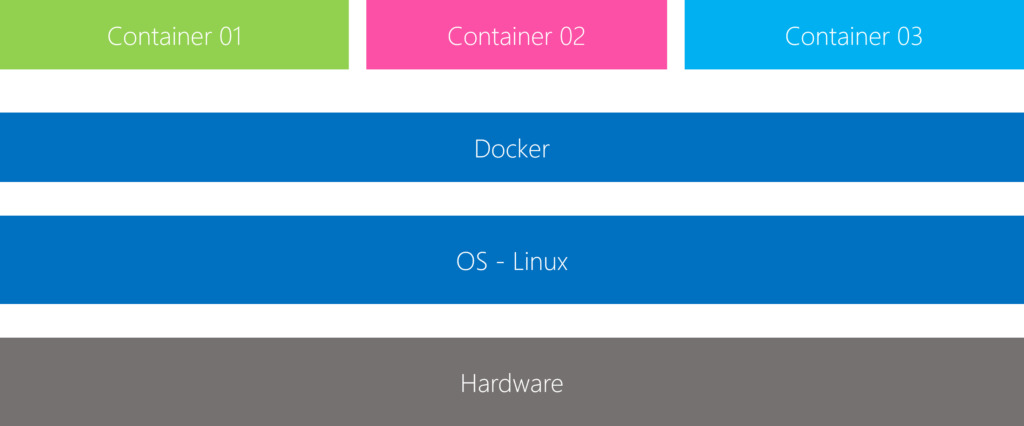

With containers, Docker’s container runtime takes the place of the hypervisor and sits between the containers and the host operating system. The containers communicate with the container runtime, which in turn communicates with the host operating system to get the necessary resources from the physical infrastructure.

What is Kubernetes (K8s)?

Let’s focus on why we need Kubernetes. When we run containers in production without container orchestration, we have to handle many tasks manually, such as:

- Scaling

- Fixing crashes

Kubernetes is an open-source platform to orchestrate and manage containerized workloads and services. Kubernetes handles various orchestration tasks, including discovery and load balancing, container storage orchestration, automatic container rollouts and rollbacks, container distribution, security, and container resilience processes, called self-healing.
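The idea behind self-healing and scaling can be illustrated with a small reconciliation loop: compare the desired state with the actual state and take whatever actions close the gap. The Python sketch below is a toy model of that idea only. It does not use the Kubernetes API, and the container dictionaries, the `web-` naming scheme, and the function names are assumptions made for illustration.

```python
import itertools


def next_free_name(containers, prefix="web"):
    """Return the first name of the form '<prefix>-<n>' that is not in use."""
    used = {c["name"] for c in containers}
    for n in itertools.count(1):
        name = f"{prefix}-{n}"
        if name not in used:
            return name


def reconcile(desired_replicas, containers):
    """Move the actual state toward the desired replica count.

    `containers` is a list of dicts such as {"name": "web-1", "healthy": True}.
    Returns the new list of containers and a log of the actions taken.
    """
    actions = []

    # Self-healing: drop crashed containers so that they are replaced below.
    alive = []
    for c in containers:
        if c["healthy"]:
            alive.append(c)
        else:
            actions.append(f"remove crashed {c['name']}")

    # Scale up: start containers until the desired count is reached.
    while len(alive) < desired_replicas:
        name = next_free_name(alive)
        alive.append({"name": name, "healthy": True})
        actions.append(f"start {name}")

    # Scale down: stop the most recently added containers first.
    while len(alive) > desired_replicas:
        actions.append(f"stop {alive.pop()['name']}")

    return alive, actions


if __name__ == "__main__":
    state = [
        {"name": "web-1", "healthy": True},
        {"name": "web-2", "healthy": False},  # simulated crash
    ]
    state, log = reconcile(3, state)
    print(log)
```

An orchestrator runs this kind of comparison continuously, which is why a crashed container is replaced without anyone having to notice and restart it by hand.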

While Kubernetes is not a container engine like Docker, it must be able to manage and execute containers in order to create, control, and maintain a resilient container cluster. This critical ability to interact with various container runtimes is handled through the Kubernetes Container Runtime Interface (CRI). Kubernetes is thus a container orchestration technology. It is supported by all major public cloud providers, such as GCP, Azure, and AWS, and the Kubernetes project is one of the top-ranked projects on GitHub. Other orchestration technologies are available today as well; Docker, for example, has a tool called Docker Swarm.

K8s provides features that make container management, maintenance, and life cycle handling easier than using a container engine alone. These features include automated rollouts and rollbacks, storage orchestration, service discovery, horizontal scaling, load balancing, secret management, and self-healing.
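As an illustration of horizontal scaling, the Kubernetes documentation describes the Horizontal Pod Autoscaler as computing the desired replica count as the ceiling of `currentReplicas * currentMetricValue / desiredMetricValue`. The Python sketch below applies that formula and clamps the result to a minimum and maximum. It is a simplification: the real autoscaler also applies tolerances and other checks, and the function name and default bounds here are my own assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return the replica count suggested by the basic autoscaling formula.

    current_metric and target_metric must use the same unit, for example
    average CPU utilization in percent.
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Load is twice the target, so the replica count doubles.
    print(desired_replicas(current_replicas=2, current_metric=200, target_metric=100))
    # Load is half the target, so the replica count is halved.
    print(desired_replicas(current_replicas=4, current_metric=50, target_metric=100))
```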

I trust you had a good time reading this blog post. That concludes the basic concepts of Docker, containers, and Kubernetes. The next part of this series will be published soon.

Santhosh has over 15 years of experience in the IT industry. He works as a Cloud Infrastructure Architect and has a wide range of expertise in Microsoft technologies, with a specialization in public and private cloud services for enterprise customers. His varied background includes work in cloud computing, virtualization, storage, networks, automation, and DevOps.